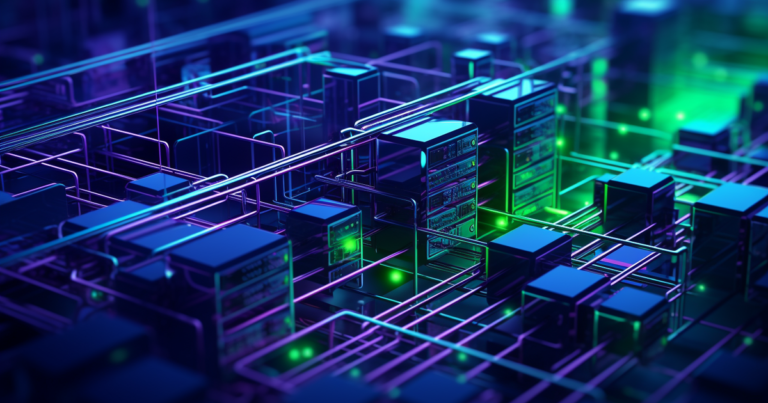

Optimizing AI Models with AWS: Unleashing the Full Potential of Artificial Intelligence.

This article takes a deep dive into the infrastructure and resources AWS offers for optimizing AI models. It covers the compute, storage, managed machine learning, and security services that can improve a model's performance and efficiency, and explains where each one fits.

Understanding the Infrastructure Requirements for Optimizing AI Models with AWS

Optimizing AI models has become crucial in many industries as businesses look to artificial intelligence for a competitive edge. Amazon Web Services (AWS) offers a comprehensive suite of tools and resources for this work. This section looks at the infrastructure requirements for optimizing AI models with AWS.

To begin with, it is important to understand that AI models require significant computational power and storage capacity. AWS provides a range of infrastructure options to meet these requirements. One such option is Amazon EC2, which offers virtual servers in the cloud. These servers can be easily scaled up or down based on the workload, ensuring that the AI models have access to the necessary computational resources.

In addition to computational power, AI models also require large amounts of data for training and inference. AWS offers various storage options to accommodate these needs. Amazon S3, for example, provides scalable object storage that can store and retrieve any amount of data. This allows organizations to easily manage and access the vast datasets required for training AI models.
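As a minimal sketch of how training data might be organized in S3, the helper below builds a predictable object key per dataset split and uploads a file with boto3's `upload_file`. The bucket name, project name, and the `project/split/filename` layout are assumptions made for illustration, not an AWS convention.

```python
from pathlib import Path

VALID_SPLITS = {"train", "validation", "test"}


def dataset_key(project: str, split: str, filename: str) -> str:
    """Build an S3 object key such as 'churn-model/train/part-0001.csv'."""
    if split not in VALID_SPLITS:
        raise ValueError(f"unknown split: {split!r}")
    # Keep only the file name so local directory structure does not leak into S3.
    return f"{project}/{split}/{Path(filename).name}"


def upload_dataset(bucket: str, project: str, split: str, local_path: str) -> str:
    """Upload one local file to S3 and return its s3:// URI."""
    import boto3  # imported lazily so dataset_key works without the AWS SDK

    key = dataset_key(project, split, local_path)
    boto3.client("s3").upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"
```

Calling `upload_dataset("my-training-bucket", "churn-model", "train", "data/part-0001.csv")` would need valid AWS credentials and an existing bucket; both names here are hypothetical.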

Furthermore, AWS provides specialized services for optimizing AI models. One such service is Amazon SageMaker, which is a fully managed machine learning service. SageMaker simplifies the process of building, training, and deploying AI models by providing a complete set of tools and frameworks. It also offers automatic model tuning, which optimizes the hyperparameters of the model to achieve better performance.

Another important aspect of optimizing AI models is the ability to experiment and iterate quickly. AWS Lambda runs code without provisioning or managing servers, which suits short, event-driven tasks such as data preprocessing or lightweight inference (a single invocation is limited to 15 minutes). AWS Batch schedules containerized jobs and provisions the underlying compute on your behalf, which suits longer-running work such as batch training or evaluation runs. In both cases, developers can focus on writing code rather than managing infrastructure.
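To make the Lambda case concrete, here is a small sketch of a Python handler that scores a batch of predictions against labels. The `handler(event, context)` signature is the standard Lambda entry point for Python; the shape of the event payload (`predictions` and `labels` lists) is an assumption chosen for this example.

```python
import json


def handler(event, context):
    """Lambda entry point: return the accuracy of predictions against labels."""
    predictions = event.get("predictions", [])
    labels = event.get("labels", [])

    # Reject empty or mismatched inputs instead of dividing by zero.
    if not labels or len(predictions) != len(labels):
        return {
            "statusCode": 400,
            "body": json.dumps({"error": "predictions and labels must be non-empty and equal in length"}),
        }

    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return {
        "statusCode": 200,
        "body": json.dumps({"accuracy": correct / len(labels)}),
    }
```

Because the handler is a plain function, it can be exercised locally by passing a dictionary as `event` and `None` as `context` before it is deployed.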

To ensure the security and reliability of AI models, AWS offers a range of security and compliance services. For example, AWS Identity and Access Management (IAM) allows organizations to manage user access and permissions. AWS also provides encryption services to protect data at rest and in transit. These security measures are crucial for organizations that deal with sensitive data and need to comply with industry regulations.
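As an illustration of managing permissions with IAM, the sketch below builds a least-privilege policy document that grants read-only access to one prefix of a training-data bucket. The bucket and prefix names are hypothetical, and a real policy would need to match the organization's own resources and compliance requirements.

```python
import json


def training_data_read_policy(bucket: str, prefix: str) -> dict:
    """Return an IAM policy document allowing read-only access to one S3 prefix."""
    prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket, restricted to the prefix.
                "Sid": "ListTrainingPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                # Reading is granted on the objects under the prefix only.
                "Sid": "ReadTrainingObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(training_data_read_policy("my-training-bucket", "churn-model/train"), indent=2))
```

The printed JSON can be attached to a role used by training jobs; write and delete actions are deliberately left out.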

In conclusion, optimizing AI models with AWS requires a robust infrastructure that can provide the necessary computational power and storage capacity. AWS offers a comprehensive suite of tools and resources to meet these requirements, including virtual servers, scalable storage, and specialized services like SageMaker. Additionally, AWS provides services for rapid experimentation and iteration, as well as security and compliance measures. By leveraging these infrastructure resources, organizations can effectively optimize their AI models and unlock the full potential of artificial intelligence.

Exploring the Resources and Tools Available for Optimizing AI Models on AWS

Optimizing AI models is a crucial step in ensuring their efficiency and accuracy. With the advent of cloud computing, platforms like Amazon Web Services (AWS) have become go-to solutions for AI developers. AWS offers a wide range of resources and tools that can make AI models faster, more accurate, and more cost-effective. This section looks at those resources and tools in more detail.

One of the key resources offered by AWS is Amazon Elastic Compute Cloud (EC2). EC2 provides resizable compute capacity in the cloud, allowing developers to quickly scale their AI models based on demand. This flexibility is particularly useful when dealing with large datasets or computationally intensive tasks. By leveraging EC2, developers can optimize their AI models by distributing the workload across multiple instances, reducing processing time and improving overall performance.
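One simple way to distribute a workload across multiple instances is to give each worker a disjoint slice of the input files. The sketch below shows round-robin sharding in plain Python; how each instance learns its `worker_index` and `num_workers` (environment variables, instance tags, a job scheduler) is left as an assumption.

```python
from typing import List, Sequence


def shard(items: Sequence[str], num_workers: int, worker_index: int) -> List[str]:
    """Return the items assigned to one worker using round-robin sharding."""
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    if not 0 <= worker_index < num_workers:
        raise ValueError("worker_index must be in [0, num_workers)")
    # Worker i takes items i, i + num_workers, i + 2 * num_workers, ...
    return list(items[worker_index::num_workers])


if __name__ == "__main__":
    files = [f"part-{i:04d}.csv" for i in range(10)]
    for index in range(3):
        print(index, shard(files, num_workers=3, worker_index=index))
```

Every file lands on exactly one worker, and shard sizes differ by at most one, so no instance sits idle while another works through a much larger share.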

Another valuable resource provided by AWS is Amazon Simple Storage Service (S3). S3 offers scalable object storage for developers to store and retrieve large amounts of data. When optimizing AI models, having access to vast amounts of training data is crucial. S3 allows developers to easily store and access datasets, enabling them to train their models more effectively. Additionally, S3 provides high durability and availability, ensuring that data is always accessible when needed.

To further optimize AI models, AWS offers Amazon Elastic Inference. This service lets developers attach low-cost, GPU-powered inference acceleration to EC2 instances. By offloading inference to an Elastic Inference accelerator, developers can achieve faster inference times without paying for a full GPU instance. This is particularly useful when deploying AI models in production, where real-time inference is required. Note that AWS stopped onboarding new customers to Elastic Inference in April 2023, so new projects should check current AWS guidance for inference acceleration options.

AWS also provides Amazon SageMaker, a fully managed machine learning service. SageMaker offers a complete set of tools for building, training, and deploying AI models. With SageMaker, developers can easily experiment with different algorithms and hyperparameters, optimizing their models for maximum accuracy. The service also provides automatic model tuning, which uses machine learning to find the best combination of hyperparameters for a given model. By leveraging SageMaker, developers can streamline the optimization process and achieve better results in less time.
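To give a sense of what automatic model tuning is configured with, the sketch below builds the tuning configuration dictionary in the shape expected by the `HyperParameterTuningJobConfig` argument of boto3's SageMaker `create_hyper_parameter_tuning_job` call. The hyperparameter names (`learning_rate`, `max_depth`), their ranges, and the metric name are placeholders; the right values depend on the algorithm being tuned.

```python
def tuning_job_config(metric_name: str, max_jobs: int, max_parallel: int) -> dict:
    """Build a SageMaker hyperparameter tuning configuration (illustrative values)."""
    if max_parallel > max_jobs:
        raise ValueError("max_parallel cannot exceed max_jobs")
    return {
        # Bayesian search uses results of earlier jobs to pick the next candidates.
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {
            "Type": "Maximize",
            "MetricName": metric_name,
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        "ParameterRanges": {
            # The API expects range bounds as strings.
            "ContinuousParameterRanges": [
                {"Name": "learning_rate", "MinValue": "0.0001", "MaxValue": "0.1",
                 "ScalingType": "Logarithmic"},
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10",
                 "ScalingType": "Linear"},
            ],
        },
    }


if __name__ == "__main__":
    print(tuning_job_config("validation:auc", max_jobs=20, max_parallel=2))
```

A complete request also needs a job name and a training job definition (container image, IAM role, input and output locations), which are omitted here. Lower `max_parallel` values usually let a Bayesian search learn more from each completed job, at the cost of a longer wall-clock time.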

In addition to these resources, AWS offers tools for hands-on work with AI models. AWS DeepLens is a deep learning-enabled video camera that runs models at the edge: developers train a model in the cloud, for example with SageMaker, and deploy it to the device for use cases such as object detection or facial recognition. (AWS has announced end of life for DeepLens, so check its current availability before planning around it.) Similarly, AWS DeepRacer is a fully autonomous 1/18th scale race car that developers can use to train reinforcement learning models. By competing in virtual races, developers can optimize their models for speed and performance.
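In DeepRacer, the main lever for optimizing a model is the reward function, a Python function named `reward_function` that receives a dictionary of simulator state. The sketch below rewards staying close to the center line using the `all_wheels_on_track`, `distance_from_center`, and `track_width` inputs; the linear falloff and the minimum reward of `1e-3` are illustrative choices, not a recommended racing strategy.

```python
def reward_function(params):
    """Reward the car for staying on the track and near the center line."""
    min_reward = 1e-3

    # Leaving the track earns almost nothing.
    if not params["all_wheels_on_track"]:
        return min_reward

    half_width = 0.5 * params["track_width"]
    distance = params["distance_from_center"]

    # Reward falls linearly from 1.0 at the center line to the minimum at the edge.
    reward = max(min_reward, 1.0 - distance / half_width)
    return float(reward)
```

A function this simple tends to produce cautious driving; rewarding speed or progress as well is a common next experiment.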

In conclusion, AWS provides a comprehensive set of resources and tools for optimizing AI models. From scalable compute capacity with EC2 to object storage with S3, developers have access to the infrastructure needed to handle large datasets and computationally intensive tasks. Additionally, services like Elastic Inference and SageMaker enable developers to achieve faster inference times and better model accuracy. With tools like DeepLens and DeepRacer, developers can optimize their models for specific use cases and deploy them at the edge. By leveraging these resources and tools, developers can optimize their AI models on AWS, making them more efficient, accurate, and cost-effective.

Taken together, optimizing AI models with AWS comes down to matching infrastructure and resources to the workload. By leveraging the capabilities of AWS, organizations can effectively scale their AI models, improve performance, and reduce costs. AWS provides a wide range of services and tools that enable efficient training and deployment of AI models, such as Amazon EC2 instances, Amazon S3 for data storage, and Amazon SageMaker for model development. Additionally, AWS offers auto-scaling and cost optimization features to ensure optimal resource utilization. By using these resources effectively, organizations can maximize the potential of their AI models and achieve better outcomes.